Sky & Telescope

Most ways of counting and measuring things work logically. When the thing that you're measuring increases, the number gets bigger. When you gain weight, after all, the scale doesn't tell you a smaller number of pounds or kilograms. But things are not so sensible in astronomy — at least not when it comes to the brightnesses of stars. Enter the Stellar Magnitude System.

Ancient Origins of the Stellar Magnitude System

Star magnitudes do count backward, the result of an ancient fluke that seemed like a good idea at the time. The story begins around 129 B.C., when the Greek astronomer Hipparchus produced the first well-known star catalog. Hipparchus ranked his stars in a simple way. He called the brightest ones "of the first magnitude," simply meaning "the biggest." Stars not so bright he called "of the second magnitude," or second biggest. The faintest stars he could see he called "of the sixth magnitude." Around A.D. 140 Claudius Ptolemy copied this system in his own star list. Sometimes Ptolemy added the words "greater" or "smaller" to distinguish between stars within a magnitude class. Ptolemy's works remained the basic astronomy texts for the next 1,400 years, so everyone used the system of first to sixth magnitudes. It worked just fine.

Galileo forced the first change. On turning his newly made telescopes to the sky, Galileo discovered that stars existed that were fainter than Ptolemy's sixth magnitude. "Indeed, with the glass you will detect below stars of the sixth magnitude such a crowd of others that escape natural sight that it is hardly believable," he exulted in his 1610 tract Sidereus Nuncius. "The largest of these . . . we may designate as of the seventh magnitude." Thus did a new term enter the astronomical language, and the stellar magnitude system became open-ended. There could be no turning back.

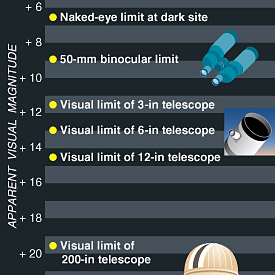

As telescopes got bigger and better, astronomers kept adding more magnitudes to the bottom of the scale. Today a pair of 50-millimeter binoculars will show stars of about 9th magnitude, a 6-inch amateur telescope will reach to 13th magnitude, and the Hubble Space Telescope has seen objects as faint as 31st magnitude.

By the middle of the 19th century, astronomers realized there was a pressing need to define the entire scale of the stellar magnitude system more precisely than by eyeball judgment. They had already determined that a 1st-magnitude star shines with about 100 times the light of a 6th-magnitude star. Accordingly, in 1856 the Oxford astronomer Norman R. Pogson proposed that a difference of five magnitudes be exactly defined as a brightness ratio of 100 to 1. This convenient rule was quickly adopted. One magnitude thus corresponds to a brightness difference of exactly the fifth root of 100, or very close to 2.512 — a value known as the Pogson ratio.

The Meaning of Magnitudes

| This difference in magnitude... | ...means this ratio in brightness |
|---|---|
| 0 | 1 to 1 |
| 0.1 | 1.1 to 1 |
| 0.2 | 1.2 to 1 |
| 0.3 | 1.3 to 1 |
| 0.4 | 1.4 to 1 |
| 0.5 | 1.6 to 1 |
| 1.0 | 2.5 to 1 |
| 2 | 6.3 to 1 |
| 3 | 16 to 1 |
| 4 | 40 to 1 |
| 5 | 100 to 1 |
| 10 | 10,000 to 1 |
| 20 | 100,000,000 to 1 |
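Pogson's definition turns the table above into simple arithmetic: a magnitude difference $\Delta m$ corresponds to a brightness ratio of $100^{\Delta m/5}$, which is the same as $10^{0.4\,\Delta m}$. The short Python sketch here reproduces the table's values; the names `POGSON_RATIO` and `brightness_ratio` are illustrative helpers, not part of any published library.

```python
# Pogson's 1856 definition: a difference of exactly 5 magnitudes is a
# brightness ratio of exactly 100 to 1.
POGSON_RATIO = 100 ** (1 / 5)  # fifth root of 100, very close to 2.512


def brightness_ratio(delta_m: float) -> float:
    """Return how many times brighter the brighter of two objects is,
    given their magnitude difference delta_m (a non-negative number)."""
    # Each one-magnitude step multiplies brightness by POGSON_RATIO,
    # so delta_m steps multiply it by 100 ** (delta_m / 5).
    return 100 ** (delta_m / 5)


if __name__ == "__main__":
    print(f"Pogson ratio: {POGSON_RATIO:.4f}")
    # Same magnitude differences as the table.
    for dm in (0, 0.1, 0.2, 0.3, 0.4, 0.5, 1.0, 2, 3, 4, 5, 10, 20):
        print(f"{dm:>4} mag -> {brightness_ratio(dm):,.1f} to 1")
```

The printed ratios carry one decimal place, so a few rows (for example 15.8 for 3 magnitudes) show slightly more precision than the rounded figures in the table.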

Fifty-eight magnitudes of apparent brightness encompass the things that astronomers study, from the glaring Sun to the faintest objects detected with the Hubble Space Telescope. This range is equivalent to a brightness ratio of some 200 billion trillion.

The scientific world of the 1850s was gaga for logarithms, so Pogson's scale is logarithmic: every one-magnitude step is the same brightness ratio. That choice is now locked into the stellar magnitude system as firmly as Hipparchus's backward numbering.

Now that star magnitudes were ranked on a precise mathematical scale, however ill-fitting, another problem became unavoidable. Some "1st-magnitude" stars were a whole lot brighter than others. Astronomers had no choice but to extend the scale out to brighter values as well as faint ones. Thus Rigel, Capella, Arcturus, and Vega are about magnitude 0, an awkward statement that sounds like they have no brightness at all! But it was too late to start over. And the scale extends even farther into negative numbers: Sirius shines at magnitude –1.5, Venus reaches –4.4, the full Moon is about –12.5, and the Sun blazes at magnitude –26.7.
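Taking the logarithm of Pogson's rule gives the relation usually written as $m_1 - m_2 = -2.5\log_{10}(F_1/F_2)$, where $F_1$ and $F_2$ are the brightnesses (fluxes) of the two objects. The minus sign is why brighter objects get smaller, and eventually negative, magnitudes. A minimal sketch using the rounded magnitudes quoted above; `times_brighter` and `magnitude_difference` are hypothetical helper names chosen for illustration.

```python
import math

# Apparent magnitudes quoted in the article (rounded values).
MAGNITUDES = {
    "Sun": -26.7,
    "full Moon": -12.5,
    "Venus": -4.4,
    "Sirius": -1.5,
    "Vega": 0.0,
}


def times_brighter(m_bright: float, m_faint: float) -> float:
    """How many times brighter an object of magnitude m_bright is than one
    of magnitude m_faint. Negative magnitudes need no special handling."""
    return 10 ** (0.4 * (m_faint - m_bright))


def magnitude_difference(flux_ratio: float) -> float:
    """Inverse relation: m1 - m2 = -2.5 * log10(F1 / F2).
    A flux_ratio above 1 gives a negative difference (a brighter object)."""
    return -2.5 * math.log10(flux_ratio)


if __name__ == "__main__":
    sun, moon = MAGNITUDES["Sun"], MAGNITUDES["full Moon"]
    print(f"Sun vs full Moon: {times_brighter(sun, moon):,.0f} times")
    sirius, vega = MAGNITUDES["Sirius"], MAGNITUDES["Vega"]
    print(f"Sirius vs Vega: {times_brighter(sirius, vega):.1f} times")
    # 100 times the brightness is 5 magnitudes brighter, i.e. a difference of -5.
    print(magnitude_difference(100))
```

With these rounded values the Sun comes out roughly 480,000 times brighter than the full Moon, and Sirius about 4 times brighter than Vega.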

Other Colors, Other Magnitudes

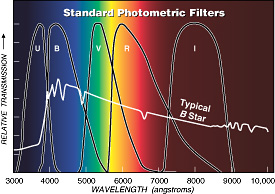

The bandpasses of the standard UBVRI color filters, along with the spectrum of a typical blue-white star.

By the late 19th century astronomers were using photography to record the sky and measure star brightnesses, and a new problem cropped up. Some stars showing the same brightness to the eye showed different brightnesses on film, and vice versa. Compared to the eye, photographic emulsions were more sensitive to blue light and less so to red light. Accordingly, two separate scales for the stellar magnitude system were devised. Visual magnitude, or $m_{\mathrm{vis}}$, described how a star looked to the eye. Photographic magnitude, or $m_{\mathrm{pg}}$, referred to star images on blue-sensitive black-and-white film. These are now abbreviated $m_v$ and $m_p$, respectively.

This complication turned out to be a blessing in disguise. The difference between a star's photographic and visual magnitude was a convenient measure of the star's color. The difference between the two kinds of magnitude was named the "color index." Its value is increasingly positive for yellow, orange, and red stars, and negative for blue ones.

But different photographic emulsions have different spectral responses! And people's eyes differ too. For one thing, the lenses of your eyes turn yellow with age; older people see the world through yellow filters. Magnitude systems designed for different wavelength ranges had to be more clearly defined than this.

Today, precise magnitudes are specified by what a standard photoelectric photometer sees through standard color filters. Several photometric systems have been devised; the most familiar is called UBV after the three filters most commonly used. U encompasses the near-ultraviolet, B is blue, and V corresponds fairly closely to the old visual magnitude; its wide peak is in the yellow-green band, where the eye is most sensitive.

Color index is now defined as the B magnitude minus the V magnitude. A pure white star has a B-V of about 0.2, our yellow Sun is 0.63, orange-red Betelgeuse is 1.85, and the bluest star believed possible is –0.4, pale blue-white.

So successful was the UBV system that it was extended redward with R and I filters to define standard red and near-infrared magnitudes. Hence it is sometimes called UBVRI. Infrared astronomers have carried it to still longer wavelengths, continuing (mostly alphabetically) after I to define the J, H, K, L, M, N, and Q bands. These were chosen to match the wavelengths of infrared "windows" in the Earth's atmosphere: wavelengths at which water vapor does not entirely absorb starlight.

In all wavebands, the bright star Vega has been chosen (arbitrarily) to define magnitude 0.0. Since Vega is dimmer at infrared wavelengths than in visible light, the infrared magnitudes of most stars, which are redder than Vega, are by definition and quite artificially "brighter" than their visual counterparts.
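Because Vega defines magnitude 0.0 in every band, it has a color index of 0 on this convention, and any star's B−V can be read as a brightness comparison with Vega: the star's blue-to-visual brightness ratio is $10^{-0.4(B-V)}$ times Vega's. A sketch using the B−V values quoted earlier, assuming (as the article does) that Vega sits at exactly 0.0 in both B and V; the function names are illustrative only.

```python
# B-V color indices quoted in the article.
B_MINUS_V = {
    "pure white star": 0.2,
    "Sun": 0.63,
    "Betelgeuse": 1.85,
    "bluest possible star": -0.4,
}


def color_index(b_mag: float, v_mag: float) -> float:
    """Color index B-V: positive for yellower/redder stars, negative for bluer ones."""
    return b_mag - v_mag


def blue_to_visual_vs_vega(b_minus_v: float) -> float:
    """A star's B-band/V-band brightness ratio divided by Vega's.

    Assumes Vega is exactly magnitude 0.0 in both B and V, so its B-V is 0
    and the Pogson relation turns B-V directly into a ratio relative to Vega.
    """
    return 10 ** (-0.4 * b_minus_v)


if __name__ == "__main__":
    for name, bv in B_MINUS_V.items():
        ratio = blue_to_visual_vs_vega(bv)
        print(f"{name:>22}: B-V = {bv:+.2f}, blue/visual vs Vega = {ratio:.2f}")
```

On this reading, the Sun's B−V of 0.63 means it emits a little over half as much blue light, relative to yellow-green, as Vega does, while Betelgeuse manages less than a fifth.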

Appearance and Reality

What, then, is an object's real brightness? How much total energy is it sending to us at all wavelengths combined, visible and invisible? The answer is called the bolometric magnitude, $m_{\mathrm{bol}}$, because total radiation was once measured with a device called a bolometer. The bolometric magnitude has been called the God's-eye view of an object's true luster. Astrophysicists value it as the true measure of an object's total energy emission as seen from Earth. The bolometric correction tells how much brighter the bolometric magnitude is than the V magnitude. Its value is always negative, because any star or object emits at least some radiation outside the visual portion of the electromagnetic spectrum.
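In symbols, writing $BC$ for the bolometric correction and following the sign convention described here, the bookkeeping is a single offset added to the V magnitude:

$$
m_{\mathrm{bol}} = V + BC, \qquad BC < 0 \quad\Longrightarrow\quad m_{\mathrm{bol}} < V
$$

The more negative $BC$ is, the larger the share of the object's output that falls outside the V band.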

Up to now we've been dealing only with apparent magnitudes — how bright things look from Earth. We don't know how intrinsically bright an object is until we also take its distance into account. Thus astronomers created the absolute magnitude scale. An object's absolute magnitude is simply how bright it would appear if placed at a standard distance of 10 parsecs (32.6 light-years).

On the left-hand map of Canis Major, dot sizes indicate stars' apparent magnitudes; the dots match the brightnesses of the stars as we see them. The right-hand version indicates the same stars' absolute magnitudes — how bright they would appear if they were all placed at the same distance (32.6 light-years) from Earth. Absolute magnitude is a measure of true stellar luminosity.

Seen from this distance, the Sun would shine at an unimpressive visual magnitude 4.85. Rigel would blaze at a dazzling –8, nearly as bright as the quarter Moon. The red dwarf Proxima Centauri, the closest star to the solar system, would appear to be magnitude 15.6, the tiniest little glimmer visible in a 16-inch telescope! Knowing absolute magnitudes makes plain how vastly diverse are the objects that we casually lump together under the single word "star."

Absolute magnitudes are always written with a capital M, apparent magnitudes with a lower-case m. Any type of apparent magnitude — photographic, bolometric, or whatever — can be converted to an absolute magnitude.
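Converting between the two scales needs only the inverse-square law. Moving an object from distance $d$ to the standard 10 parsecs scales its brightness by $(d/10)^2$, which by the Pogson relation is $5\log_{10}(d/10)$ magnitudes, so $M = m - 5\log_{10}(d / 10\ \mathrm{pc})$. The sketch below assumes nothing dims the light along the way; `absolute_magnitude` and `apparent_magnitude` are hypothetical helper names, and the Proxima Centauri inputs are approximate values assumed for illustration.

```python
import math

AU_IN_PARSECS = 1 / 206264.806  # 1 astronomical unit expressed in parsecs


def absolute_magnitude(m: float, distance_pc: float) -> float:
    """Absolute magnitude M from apparent magnitude m and distance in parsecs.

    Brightness falls off as 1/d**2, so moving an object from distance d to
    the standard 10 parsecs changes its magnitude by 5 * log10(d / 10).
    """
    return m - 5 * math.log10(distance_pc / 10)


def apparent_magnitude(M: float, distance_pc: float) -> float:
    """Inverse: how bright an object of absolute magnitude M looks at distance_pc."""
    return M + 5 * math.log10(distance_pc / 10)


if __name__ == "__main__":
    # The Sun: apparent magnitude -26.7 (from the article) at 1 AU.
    print(f"Sun: M = {absolute_magnitude(-26.7, AU_IN_PARSECS):.2f}")
    # Proxima Centauri: assumed V of about 11.13 at about 1.30 parsecs.
    print(f"Proxima Centauri: M = {absolute_magnitude(11.13, 1.30):.1f}")
```

With the Sun's rounded apparent magnitude of –26.7, the function gives M ≈ 4.87, close to the 4.85 quoted above; the small difference comes from the rounded input. The assumed Proxima Centauri values reproduce the article's 15.6.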

(For comets and asteroids, a very different "absolute magnitude" is used. The standard here is how bright the object would appear to an observer standing on the Sun if the object were one astronomical unit away.)

So, is the stellar magnitude system too complicated? Not at all. It has grown and evolved to fill every brightness-measuring need exactly as required. Hipparchus would be thrilled.

Comments

smithn00

January 12, 2018 at 1:29 pm

But why didn't Hipparchus use Sirius as the "base" star?

Raymond

April 26, 2020 at 3:15 pm

Good question. But then Sirius would be the only 1st magnitude star.
